How to analyze your Teachable performance with Cloud Run Functions, BigQuery and Looker Studio?

Need to analyze your Teachable performance? Cloud Run Functions, BigQuery & Looker Studio can help. Not easy, but damn powerful!

Are you the proud owner of a Teachable website, providing educational content to thousands of people around the world? Well, that’s quite an exciting business. But do you know how users consume your content, or whether a given product is working as expected? Teachable does offer some insights, but here we’ll show you how to go in depth and analyze your Teachable performance with Cloud Run Functions, BigQuery and Looker Studio.

Spoiler: you’ll need some SQL and Python skills to retrieve your data through the Teachable API using Cloud Run Functions, create tables in BigQuery and eventually display the data in Looker Studio. Or you can just contact us, we’ll be glad to help :)

Top Looker Studio connectors we love and use on a daily basis (all with free trials): PMA - Windsor - Supermetrics - Catchr - Funnel - Dataslayer. Reviews here and there.

Not sure which one to pick? Have a question? Need a pro to get a project done? Contact us on LinkedIn or by e-mail, and we’ll clear up any doubt you might have.

Looking for Looker Studio courses? We don’t have any… but you can check Udemy!

Create a new Google Cloud project and set up billing

First, head to the Google Cloud Platform console and create a new project. You’ll be asked for a credit card number to enable billing (no worries here: if your code is well written, it’ll only cost you a few cents a month, plus new accounts get free trial credit to play with).

We’ll also need to enable a few APIs: Google Cloud Storage and Cloud Run Functions to retrieve your data using the Teachable API, BigQuery for storing and transforming your data, and Cloud Scheduler to define how often your data gets updated. No worries here: if you follow the steps carefully, Google will prompt you with a pop-up each time you need to enable an API and/or grant authorization, so let’s move on.

Create a Google Cloud Storage bucket

Go to Google Cloud Storage and create a new bucket in the region of your choice (try to choose one and stick with it throughout the whole process). No need to change any other parameter here.

Create your first Google Cloud Run Function

Then, let’s go to the Cloud Run Function section and create your first function. Here, we’ll retrieve all the courses. In order to do so, you’ll need to have your Teachable API key at hand.
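The function code further down pastes the API key straight into the headers dictionary. As a possible alternative, here is a minimal sketch that reads the key at run time instead, assuming you add a runtime environment variable to the function's settings. The variable name TEACHABLE_API_KEY and the helper build_headers are made up for this example:

```python
import os


def build_headers(env=os.environ):
    """Build the Teachable request headers from an environment variable.

    TEACHABLE_API_KEY is a hypothetical variable name: define it in the
    function's runtime environment variables, or rename it as you like.
    """
    api_key = env.get("TEACHABLE_API_KEY")
    if not api_key:
        raise RuntimeError("TEACHABLE_API_KEY is not set")
    return {"accept": "application/json", "apiKey": api_key}
```

With this in place, the key no longer sits in your source, and you can rotate it without editing the code.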

Create a function, give it a name and select a region (the same as before). For the trigger, select Cloud Pub/Sub and create a new topic called courses. Under additional settings, increase the timeout to 540 seconds, then click Next.

On the Code page, select Python 3.12 as the runtime and copy the following piece of code into main.py (the entry point at the top should be main as well):

    import requests
    import pandas as pd
    from google.cloud import storage


    def get_courses():
        """Fetch every course from the Teachable API, one page at a time."""
        headers = {
            "accept": "application/json",
            "apiKey": "REPLACE WITH YOUR API KEY",
        }
        all_courses = []
        page = 1
        while True:
            url = f"https://developers.teachable.com/v1/courses?page={page}&per=100"
            response = requests.get(url, headers=headers, timeout=60)
            response.raise_for_status()  # stop on a bad key or an API error
            data = response.json()
            all_courses.extend(data["courses"])
            # meta.number_of_pages tells us when the last page has been read
            if page >= int(data.get("meta", {})["number_of_pages"]):
                break
            page += 1
        return all_courses


    def export_courses():
        """Write the courses to courses.csv in the Cloud Storage bucket."""
        df = pd.DataFrame(get_courses())
        client = storage.Client(project="YOUR PROJECT NAME")
        bucket = client.get_bucket("YOUR CLOUD STORAGE BUCKET NAME")
        blob = bucket.blob("courses.csv")
        blob.upload_from_string(df.to_csv(index=False), content_type="text/csv")


    def main(data, context):
        """Entry point, called each time a message lands on the Pub/Sub topic."""
        export_courses()

Basically, this piece of code connects to the Teachable API endpoint called courses, retrieves all the data, and stores it in a CSV file called courses.csv in your Cloud Storage bucket.

In requirements.txt, copy this piece:

    pandas>=1.22
    google-cloud-storage>=1.36
    requests>=2.27

We’re just telling Google which versions to use to run the code. Save, let the function build, and move on to the Cloud Scheduler part.

Create a new Cloud Scheduler job to define how often your data gets updated

Here, it’s pretty straightforward. Create a new job, give it a name, select the same region as before, define the cron expression that specifies when your code will run (for instance 0 16 * * *, which runs every day at 4:00 PM), and select your timezone.
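If the five fields of a cron expression are new to you, this small sketch names them one by one. It is only an illustration: describe_cron is a helper made up for this article, not part of any Google library.

```python
# The five fields of a unix-cron expression, in order.
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expression):
    """Split a five-field cron expression into named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    return dict(zip(CRON_FIELDS, parts))


print(describe_cron("0 16 * * *"))
```

For 0 16 * * *, the minute is 0 and the hour is 16, while the three stars mean every day of the month, every month and every day of the week.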

Set the target type to Pub/Sub, select the topic you created previously, and put anything in the message body (it won’t have any impact). Create your job, and we’re done here.
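The message body has no impact only because our main function never looks at it. It does reach the function: with the (data, context) signature used in this article, the first argument is a dictionary whose data field holds the body, base64-encoded. Here is a rough sketch of how you could read it, should you ever want one function to serve several schedules. The read_message helper and the idea of naming the export in the body are assumptions for illustration:

```python
import base64


def read_message(event):
    """Return the Pub/Sub message body as text ('' when there is none)."""
    raw = event.get("data")
    return base64.b64decode(raw).decode("utf-8") if raw else ""


def main(data, context):
    # Hypothetical use: the scheduler's message body names what to export,
    # falling back to "courses" when the body is empty.
    target = read_message(data) or "courses"
    print(f"Would export: {target}")
    return target
```

A job whose message body is users would then make the function report users, while an empty body falls back to courses.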

At this point, we’re retrieving courses data from the Teachable API once a day at 4 PM and putting it in a CSV file in the Google Cloud Storage bucket. Not bad, right?

Using the Teachable API documentation, you’ll have to create several functions to retrieve all the data you’re interested in (users, pricing plans, transactions, …), so you end up with all your Teachable data in your bucket, in different CSV files.
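Since those functions will all look alike, one option is to factor the pagination loop into a single helper. The sketch below is written under assumptions: it supposes every endpoint behaves like courses (records stored under a key named after the endpoint, page count in meta.number_of_pages), which you should check against the Teachable API documentation. It uses the standard library instead of requests so it runs anywhere, and fetch_all and http_get_json are names invented here.

```python
import json
import urllib.request

BASE_URL = "https://developers.teachable.com/v1"


def http_get_json(url, headers):
    """Default fetcher: GET a URL and decode the JSON body."""
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def fetch_all(endpoint, api_key, get_json=http_get_json):
    """Collect every record from a paginated endpoint.

    Assumes, as with courses, that records sit under a key named after
    the endpoint and that meta.number_of_pages gives the page count.
    """
    headers = {"accept": "application/json", "apiKey": api_key}
    records, page = [], 1
    while True:
        data = get_json(f"{BASE_URL}/{endpoint}?page={page}&per=100", headers)
        records.extend(data.get(endpoint, []))
        if page >= int(data.get("meta", {}).get("number_of_pages", 1)):
            return records
        page += 1
```

Calling fetch_all("courses", "YOUR API KEY") should return the same list as get_courses. The get_json parameter is there so you can pass a fake fetcher and try the loop without calling the real API.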

Retrieve the data in BigQuery

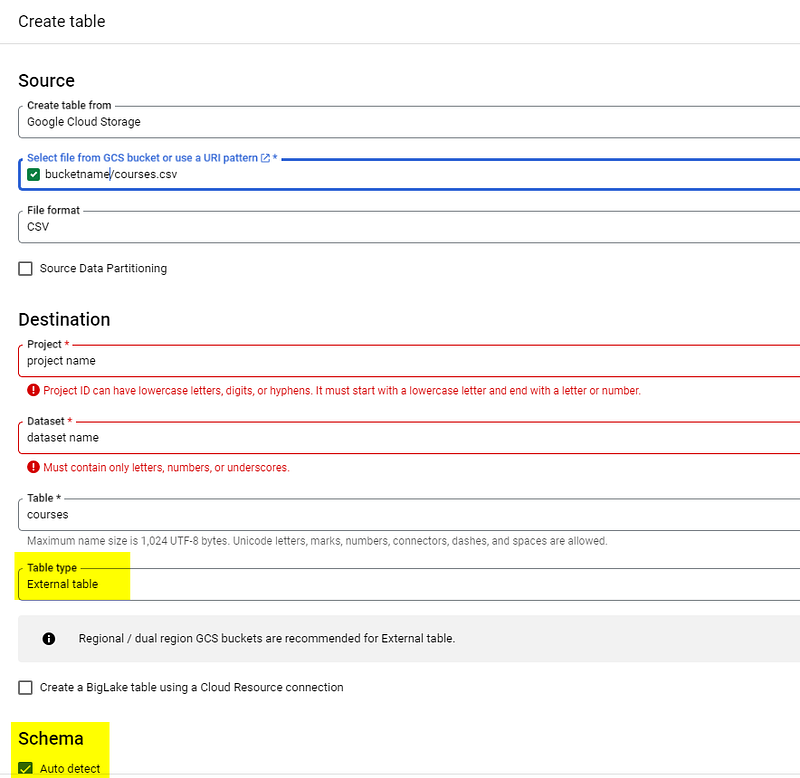

We’re almost there. Once you’ve created your CSV files, we need to make them accessible in BigQuery. Quite easy, in all fairness. Head to BigQuery, create a new dataset, and then create a new table (in both cases by clicking on the 3 dots next to your project name / dataset name). Select Google Cloud Storage as the source and point it at your CSV file, make sure you select External table as the table type, and set the schema to Auto detect.

In Advanced options, remember to set Header rows to skip to 1, as the first row contains the header, and you’re good to go: your data is in BigQuery!

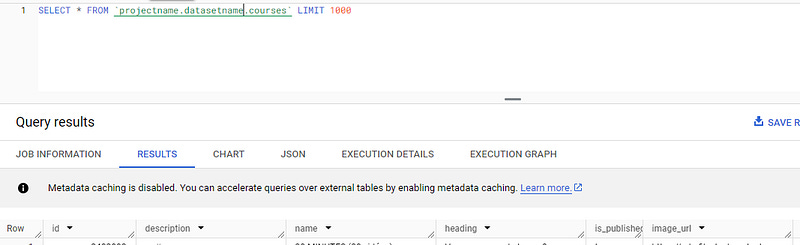
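If you have many CSV files, clicking through the form for each one gets tedious. The same external table can also be declared with a DDL statement run in the BigQuery query editor. The sketch below only builds that statement as text; the project, dataset and bucket names are placeholders, and external_table_ddl is a helper made up for this example.

```python
def external_table_ddl(table, gcs_uri):
    """Build the DDL for a CSV-backed BigQuery external table.

    No column list is given, so BigQuery auto-detects the schema;
    skip_leading_rows = 1 skips the header row.
    """
    return (
        f"CREATE OR REPLACE EXTERNAL TABLE `{table}`\n"
        "OPTIONS (\n"
        "  format = 'CSV',\n"
        f"  uris = ['{gcs_uri}'],\n"
        "  skip_leading_rows = 1\n"
        ");"
    )


# Placeholder names: replace with your own project, dataset and bucket.
print(external_table_ddl("my-project.teachable.courses",
                         "gs://my-bucket/courses.csv"))
```

Paste the printed statement into the query editor and run it, once per CSV file.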

Send tables directly to Looker Studio, or write SQL queries to transform them first

We’ve done the big part. Now, all you need to do is create a new Looker Studio report, and either create BigQuery data sources pointing to the tables you’ve just created, or write custom SQL queries to transform your data before sending it to Looker Studio.

You’re very welcome :) And that’s all folks.

PROBLEM SOLVED !
